Text-to-video (T2V) generative AI has become one of the most discussed topics in tech, sparking considerable excitement and debate. The technology is still in its infancy, but its potential is immense. Advances in T2V are poised to reshape content creation, with capabilities that could prove breathtaking. Think of it as a journey into uncharted territory where possibilities abound, much like the whimsical worlds imagined by Dr. Seuss.

A Glimpse of What’s Possible with OpenAI’s Sora Turbo

The latest buzz in T2V comes courtesy of OpenAI’s newly launched Sora Turbo, a successor to its earlier model, Sora. Sora Turbo is tied to ChatGPT’s paid tiers: it is available exclusively to ChatGPT Plus and Pro users, making it a premium, pay-to-play product. While this exclusivity might draw some sighs, the tool’s capabilities are undeniably compelling.

ChatGPT currently boasts 300 million weekly active users. Only the paying Plus and Pro subscribers among them can use Sora Turbo, but even if a small fraction of that audience adopts it, Sora Turbo could become a dominant player, potentially overshadowing competitors. With so much attention and such a large potential user base, its impact on the T2V landscape could be substantial.

From Text-to-Text to Text-to-Video: The Evolution of Generative AI

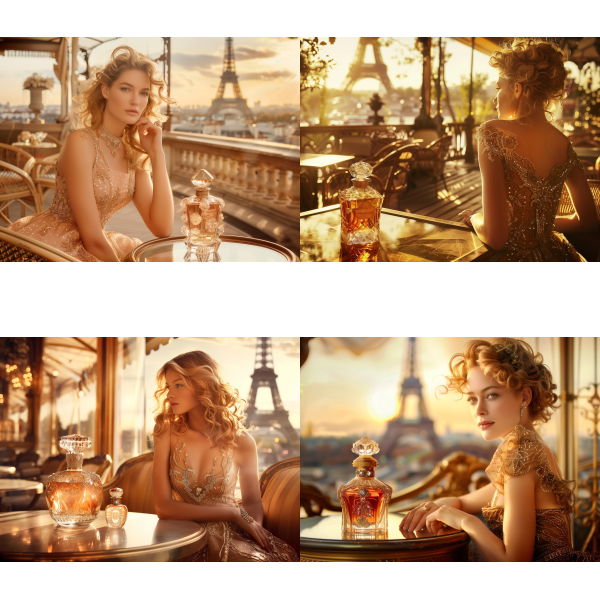

Generative AI began with text-to-text (T2T) capabilities, enabling users to input text prompts and receive responses like essays, poems, or narratives. Next came text-to-image functionality, where prompts generated images ranging from photorealistic depictions to abstract digital art. Now, the field has expanded to T2V, offering a dynamic new frontier for content creation.

The ultimate goal? Seamless X-to-X generative AI—technology capable of transforming any input medium (text, image, audio, video) into any output medium. Sora Turbo represents a significant step toward this vision, allowing users to create short, visually impressive videos based on text prompts.

The Challenges of Suitability in T2V

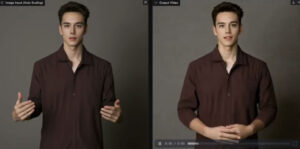

The biggest hurdle in T2V is achieving suitability—ensuring the AI-generated video aligns closely with the user’s intent. For example, imagine prompting the AI to create a video of a cat wearing a hat, sitting in a box, and riding a moving train. The AI must interpret these elements correctly, but different users might have vastly different visions of the scene’s style, colors, or motion.

This challenge stems from the inherent subjectivity of human imagination. Despite advances, no T2V system, including Sora Turbo, has fully solved the issue of suitability. Current models still require refinement to produce faithful representations of user prompts.

Breaking Down Sora Turbo’s Capabilities

OpenAI’s Sora Turbo demonstrates impressive advancements but still faces challenges common to T2V systems. Here’s an overview of its strengths and areas for improvement, with an informal letter grade for each:

- Visual Quality and Resolution: Early T2V videos were low-resolution and clunky, but Sora Turbo delivers state-of-the-art visuals. Its vivid imagery earns an A-/B+ rating for quality.

- Temporal Consistency: Ensuring smooth transitions between video frames is vital. Sora Turbo shows progress here, though occasional inconsistencies remain, warranting a B grade.

- Object Permanence: Maintaining the continuity of objects (e.g., a cat’s hat staying visible across frames) is a challenge. Sora Turbo scores a B-/C+ in this area.

- Scene Physics: Generating videos that adhere to natural laws, such as gravity or collision effects, is a complex task. Sora Turbo’s performance here also earns a B-/C+ rating.

- Feature Set: Sora Turbo offers a robust array of features, including stylistic options, prompt libraries, video storage, and more. Its capabilities are rated A-/B+ overall.
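
One rough way to make the temporal-consistency criterion above concrete is to measure how much the pixels change between consecutive frames. The sketch below is purely illustrative: the function names `mean_abs_diff` and `temporal_jitter`, the grayscale nested-list frame format, and the choice of mean absolute difference are all assumptions made for this example. It is not how OpenAI evaluates Sora Turbo, nor how the grades above were assigned.

```python
from typing import List

# Hypothetical frame format: rows of grayscale pixel intensities in [0, 1].
Frame = List[List[float]]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute per-pixel difference between two same-sized frames."""
    total = 0.0
    count = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    return total / count if count else 0.0


def temporal_jitter(frames: List[Frame]) -> List[float]:
    """Frame-to-frame change for each consecutive pair of frames.

    A sudden spike in the returned list hints at flicker or an abrupt jump.
    """
    return [mean_abs_diff(prev, cur) for prev, cur in zip(frames, frames[1:])]


# Made-up 2x2 clip: the first two frames match, the third jumps abruptly.
clip = [
    [[0.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 0.0]],
    [[1.0, 1.0], [1.0, 1.0]],
]
print(temporal_jitter(clip))  # [0.0, 1.0]
```

This is a crude proxy at best. Legitimate motion also changes pixels, so a high value does not by itself mean a video is inconsistent; it only flags where a closer look might be worthwhile.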

T2V’s Emerging Role in Society

The potential of T2V extends far beyond entertainment. In the future, creating Hollywood-quality videos using AI could become accessible to virtually anyone. That shift would disrupt traditional filmmaking and raise questions about authorship and creativity. Is the prompt writer the true filmmaker? Or does the AI deserve co-credit?

Even more pressing are concerns over deepfakes. Advanced T2V tools could enable the creation of hyper-realistic videos at scale, making it difficult to distinguish real content from fabricated material. This poses risks for misinformation, fraud, and societal trust.

Navigating the Ethical Challenges of T2V

To address these issues, AI developers are implementing safeguards, such as watermarking AI-generated content and restricting certain outputs (e.g., videos featuring political figures). However, these measures are not foolproof, and bad actors continually find ways to circumvent them.

OpenAI acknowledges the importance of ethical considerations. In its official announcement of Sora Turbo, the company emphasized the need for societal collaboration to establish norms and safeguards: “We’re introducing our video generation technology now to give society time to explore its possibilities and co-develop norms and safeguards that ensure it’s used responsibly as the field advances.”

The Road Ahead

Text-to-video generative AI holds incredible promise, but it also brings profound challenges. As tools like Sora Turbo evolve, society must grapple with questions of ethics, law, and responsibility. By fostering informed discussions and collective action, we can guide this transformative technology in a direction that benefits humanity.

The future of T2V is bright, but it’s up to us to ensure it’s also responsible.
