Runway has unveiled a groundbreaking “Expand Video” feature, enabling users to extend video frames seamlessly while preserving visual consistency. This new tool, part of the Gen-3 Alpha Turbo suite, is being gradually rolled out and will soon be available to all users. Designed for creative flexibility, the feature supports text prompts and guiding images, allowing users to craft imaginative extensions to video content. Additionally, users can chain multiple expansions together to create dynamic cinematic effects such as crash zooms and pull-out reveals from static footage. Comprehensive tutorials are available at Runway Academy to help users master these advanced capabilities.

Runway’s Evolution in Video Technology

In October, Runway introduced Act-One, a Gen-3 Alpha tool designed to generate expressive character performances. Earlier, the company partnered with Lionsgate, the studio behind John Wick, giving the studio exclusive access to Runway’s AI tools for content creation. The partnership set a new benchmark in the entertainment industry and distinguished Runway from competitors like OpenAI’s Sora.

Speculation is rife about potential collaborations between Disney and OpenAI for AI-driven projects. Meanwhile, Promise, a studio backed by Andreessen Horowitz, is leveraging generative AI to produce shows and movies. Runway’s tools also contributed to the Oscar-winning film Everything Everywhere All at Once, streamlining special effects production, cutting costs, and reducing manual workload. While Runway has shipped features steadily, OpenAI has given no official update on Sora since announcing it earlier this year, and rumors suggest a launch after the elections.

Rising Competition in AI Video Tools

The global landscape of AI video tools is heating up. Chinese platforms like Kuaishou’s Kling have emerged as significant competitors, offering realistic motion simulations, 3D face and body reconstructions, and user-trained AI characters. Kling’s advanced features have earned widespread acclaim. MiniMax, another Chinese platform specializing in text-to-video generation, is also recognized for its high-quality outputs. While access to tools like Kling, Sora, and Luma’s Dream Machine remains limited, Singapore-based Pollo AI by HIX.AI is working to democratize AI video generation for broader audiences.

API Access: Bringing Gen-3 Alpha Turbo to Companies

Runway is further expanding its reach by making its most advanced video generation model, Gen-3 Alpha Turbo, available to organizations via an API in early access. This move allows companies to integrate Runway’s video generation capabilities directly into their platforms, applications, and workflows. With this integration, advertising teams, for instance, can create marketing videos seamlessly within existing tools.

Though not yet universally available, interested companies can join a waitlist to gain early access. Runway plans to gather feedback from initial users before a broader rollout in the coming weeks. The API marks a significant step in Runway’s mission to make its tools more accessible, enabling professionals and developers to produce high-quality video content efficiently.

The API will be available through two subscription plans covering individuals, small teams, and enterprises. Pricing is set at one cent per credit, with five credits required to generate one second of video, so each second costs five cents. With the Gen-3 Alpha Turbo model, clips can run up to 10 seconds, which puts a maximum-length clip at 50 credits, or 50 cents. Previously, the model was accessible exclusively via the Runway platform, making the API a notable expansion of availability.
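The pricing arithmetic above can be checked with a short sketch. This is not Runway's API or billing code. The function names, the whole-second billing, and the one-second minimum are assumptions made for illustration; only the three rates come from the figures quoted above.

```python
# Rates quoted in the article.
CENTS_PER_CREDIT = 1      # one cent per credit
CREDITS_PER_SECOND = 5    # five credits per second of video
MAX_CLIP_SECONDS = 10     # Gen-3 Alpha Turbo clip length limit


def clip_cost_cents(seconds: int) -> int:
    """Return the cost of one clip in cents.

    Assumption: clips are billed in whole seconds, with a minimum of one.
    """
    if not 1 <= seconds <= MAX_CLIP_SECONDS:
        raise ValueError(f"clip length must be 1 to {MAX_CLIP_SECONDS} seconds")
    return seconds * CREDITS_PER_SECOND * CENTS_PER_CREDIT


def format_usd(cents: int) -> str:
    """Format an integer number of cents as a dollar amount."""
    return f"${cents // 100}.{cents % 100:02d}"


if __name__ == "__main__":
    for seconds in (5, 10):
        print(f"{seconds}s clip: {format_usd(clip_cost_cents(seconds))}")
```

Working in integer cents avoids floating-point rounding when costs are summed across many clips, which matters for a team estimating a monthly budget.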

Empowering Video Creation Through Advanced Tools

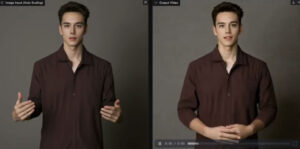

Runway’s suite of tools aims to simplify video creation for professionals and hobbyists alike. Features such as automated rotoscoping and motion tracking reduce the time and skill required for complex tasks. For instance, the automated rotoscoping tool quickly separates foreground elements from backgrounds, a task traditionally requiring extensive effort and expertise. Meanwhile, motion tracking allows users to apply effects to moving objects or people in videos effortlessly using text-based prompts.

Staying Ahead in the Generative AI Video Space

Runway’s advancements continue to outpace competitors like OpenAI and Google DeepMind, which have yet to release comparable video generation models. The recent introduction of the “Video to Video” capability exemplifies Runway’s commitment to innovation. This feature allows users to replicate existing videos with new aesthetic directions simply by uploading a source video and inputting their desired modifications.

The company’s progress has garnered significant interest. In July, reports emerged that Runway was exploring a $450 million funding round at a valuation of $4 billion. This funding would accelerate model development and support the expansion of its developer, sales, and go-to-market teams. However, Runway faced some controversy when a leaked document revealed the company had used content from thousands of YouTube creators and pirated films to train its models.

Conclusion

With the launch of its “Expand Video” feature and the rollout of API access for its Gen-3 Alpha Turbo model, Runway is cementing its position as a leader in generative AI video tools. As the company continues to innovate, its impact on the entertainment industry and creative workflows is undeniable. Despite growing competition and occasional challenges, Runway’s forward momentum positions it as a driving force in shaping the future of video content creation.
