Artificial intelligence (AI) is rapidly transforming diverse fields, ranging from robotics to medicine to political science, by enabling systems to make meaningful decisions in complex scenarios. These applications hold immense potential, such as improving urban traffic flow, enhancing medical diagnoses, and optimizing supply chains. For instance, an AI system designed to control traffic in a congested city could reduce travel times for motorists while simultaneously enhancing safety and sustainability.

However, the journey to creating AI systems capable of making good decisions is fraught with challenges. One of the primary hurdles lies in teaching these systems to adapt and perform effectively across diverse and variable tasks. This challenge becomes particularly evident when using reinforcement learning (RL), a popular approach for training AI decision-making systems.

The Challenge of Variability in AI Training

Reinforcement learning models, which learn by trial and error to optimize their performance, often struggle to generalize beyond the specific tasks they were trained on. For example, in the context of traffic management, a model trained to optimize traffic flow at a particular intersection may falter when applied to intersections with different configurations, such as varying speed limits, lane counts, or traffic patterns. Even minor variations in the task environment can significantly undermine the model’s performance.

To address this limitation, researchers at MIT have developed an innovative algorithm designed to make reinforcement learning models more reliable on complex tasks that involve inherent variability. The approach promises not only to improve the performance of AI systems but also to reduce the computational cost of training them.

A Smarter Algorithm for Task Selection

The new algorithm introduced by MIT researchers strategically selects the most impactful tasks on which to train an AI agent. This selective approach allows the agent to generalize effectively across a collection of related tasks, maximizing performance while minimizing training cost. In traffic signal control, for example, the algorithm identifies the subset of intersections that contributes most to overall performance and trains the model on those.

By prioritizing tasks that provide the greatest boost to the model’s overall performance, the algorithm streamlines the training process, ensuring the AI agent performs well across the entire task space. This method contrasts sharply with traditional approaches that either train a model for each task individually or use a single model trained on data from all tasks.

Efficiency Gains and Performance Boosts

The MIT team’s technique has demonstrated remarkable improvements in efficiency. When tested on an array of simulated tasks, including traffic signal control, real-time speed advisories, and classic control challenges, the algorithm proved to be five to 50 times more efficient than standard methods. This efficiency translates to substantial savings in data and computational resources. For instance, an algorithm that achieves a 50x efficiency boost can learn from just two tasks and achieve the same performance as a standard method trained on 100 tasks.
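The arithmetic behind the 50x example can be written out directly. This is a minimal sketch that, like the example above, measures efficiency only by the number of training tasks needed to reach the same performance; the study's own efficiency metric may account for more than this. The function names are hypothetical.

```python
def efficiency_gain(baseline_tasks: int, selected_tasks: int) -> float:
    """How many times fewer training tasks the selective method needs,
    assuming both approaches reach the same overall performance."""
    if selected_tasks <= 0 or baseline_tasks < selected_tasks:
        raise ValueError("need 0 < selected_tasks <= baseline_tasks")
    return baseline_tasks / selected_tasks


def unneeded_tasks(baseline_tasks: int, selected_tasks: int) -> int:
    """Number of tasks whose training data the selective method never used."""
    return baseline_tasks - selected_tasks


print(efficiency_gain(100, 2))  # 50.0
print(unneeded_tasks(100, 2))   # 98
```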

“From the perspective of the two main approaches, that means data from the other 98 tasks was not necessary, or that training on all 100 tasks is confusing to the algorithm, so the performance ends up worse than ours,” explains Cathy Wu, senior author of the study. Wu is the Thomas D. and Virginia W. Cabot Career Development Associate Professor in Civil and Environmental Engineering (CEE) and the Institute for Data, Systems, and Society (IDSS), as well as a member of the Laboratory for Information and Decision Systems (LIDS).

Finding the Sweet Spot in Training

Traditionally, engineers face a dilemma when training AI systems for tasks like traffic light control. One option involves training a separate algorithm for each intersection using only its data, which demands vast computational resources. The other option trains a single, generalized algorithm on data from all intersections, often resulting in suboptimal performance due to the model’s inability to account for task-specific nuances.

The MIT team’s method strikes a balance between these extremes by leveraging a technique called zero-shot transfer learning. This approach allows an AI model trained on one task to perform well on a related task without additional training. By strategically selecting tasks that maximize overall performance gains, the researchers’ algorithm, named Model-Based Transfer Learning (MBTL), optimizes the training process.
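To make zero-shot transfer concrete, the sketch below assumes a matrix of scores is already known, where entry `[i][j]` is how well a model trained on task `i` performs on task `j` with no further training. Each task then reuses whichever trained model scores best on it. The matrix values and the function name are hypothetical; in practice these scores would have to be measured or estimated.

```python
from typing import Sequence


def deployed_performance(transfer: Sequence[Sequence[float]],
                         trained: Sequence[int]) -> float:
    """Average score over all tasks when each task reuses, with no further
    training, whichever trained model scores best on it.

    transfer[i][j] is the score of a model trained on task i and evaluated
    zero-shot on task j.
    """
    if not trained:
        raise ValueError("train at least one task")
    n_tasks = len(transfer)
    # For every target task j, keep the best score among the trained models.
    total = sum(max(transfer[i][j] for i in trained) for j in range(n_tasks))
    return total / n_tasks


# Hypothetical zero-shot scores for three intersections
# (rows: trained on, columns: deployed on). The diagonal is each model's own-task score.
transfer = [
    [1.0, 0.8, 0.3],
    [0.7, 1.0, 0.7],
    [0.2, 0.6, 1.0],
]

print(deployed_performance(transfer, [0, 1, 2]))  # train every task separately
print(deployed_performance(transfer, [1]))        # one model reused everywhere
print(deployed_performance(transfer, [0, 2]))     # a selected subset
```

In this made-up example, training two of the three tasks recovers most of the average score that training every intersection separately (the first option described earlier) achieves, which is the kind of trade-off task selection exploits.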

How MBTL Works

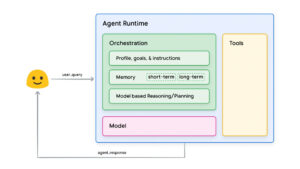

MBTL operates by modeling two key aspects of AI training:

- Individual Task Performance: It evaluates how well an algorithm would perform if it were trained independently on a specific task.

- Generalization Performance: It estimates how much the algorithm's performance would degrade if the trained model were transferred, without retraining, to each of the other tasks, a crucial measure of its ability to generalize.
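The two components can be combined into a single estimate: the score on a new task equals the model's own-task score minus its expected degradation. The sketch below assumes, purely for illustration, that degradation grows linearly with the distance between task parameters (for example, the difference in speed limits) and fits that rate by least squares through the origin. The measurements and function names are hypothetical, and the study's actual estimators may be more sophisticated.

```python
from typing import Sequence, Tuple


def fit_degradation_slope(observations: Sequence[Tuple[float, float]]) -> float:
    """Least-squares slope through the origin for (task distance, performance drop) pairs.

    Assumes the drop grows linearly with distance, a simplifying hypothesis.
    """
    num = sum(d * drop for d, drop in observations)
    den = sum(d * d for d, _ in observations)
    if den == 0:
        raise ValueError("need at least one observation with nonzero distance")
    return num / den


def estimate_transfer(train_perf: float, distance: float, slope: float) -> float:
    """Estimated score on another task: own-task score minus estimated degradation."""
    return train_perf - slope * distance


# Hypothetical measurements: a model trained on one intersection was evaluated on
# others whose parameters differ by `distance`; `drop` is the observed loss in score.
obs = [(1.0, 0.1), (2.0, 0.2), (4.0, 0.4)]
slope = fit_degradation_slope(obs)
print(slope)
print(estimate_transfer(0.9, 3.0, slope))
```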

Using these two estimates, MBTL selects tasks sequentially: it first picks the task expected to yield the largest performance gain, then repeatedly adds the task that provides the biggest additional improvement to overall performance. This targeted approach avoids unnecessary training, making the process more efficient and effective.
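The sequential selection step can be sketched as a greedy loop. This minimal version assumes each task is described by a single number, that each task's own-task score is known, and that performance drops linearly with the distance between task parameters; these are illustrative assumptions, and `greedy_select`, `estimated_score`, and `slope` are hypothetical names rather than the study's code.

```python
from typing import List, Sequence


def estimated_score(train_perf: Sequence[float], contexts: Sequence[float],
                    slope: float, source: int, target: int) -> float:
    """Estimated score of a model trained on `source` and deployed zero-shot on `target`.

    Assumes performance drops linearly with the distance between task parameters.
    """
    return train_perf[source] - slope * abs(contexts[source] - contexts[target])


def total_if_added(best: Sequence[float], train_perf: Sequence[float],
                   contexts: Sequence[float], slope: float, candidate: int) -> float:
    """Estimated total score if `candidate` were trained next; each task keeps
    whichever model (already trained or new) is estimated to do better on it."""
    return sum(max(best[j], estimated_score(train_perf, contexts, slope, candidate, j))
               for j in range(len(contexts)))


def greedy_select(train_perf: Sequence[float], contexts: Sequence[float],
                  slope: float, budget: int) -> List[int]:
    """Pick up to `budget` training tasks, one at a time, each time adding the task
    with the largest estimated improvement in total score across all tasks."""
    n = len(contexts)
    best = [float("-inf")] * n  # best estimated score so far for each task
    selected: List[int] = []
    for _ in range(min(budget, n)):
        candidates = [i for i in range(n) if i not in selected]
        pick = max(candidates,
                   key=lambda i: total_if_added(best, train_perf, contexts, slope, i))
        selected.append(pick)
        best = [max(best[j], estimated_score(train_perf, contexts, slope, pick, j))
                for j in range(n)]
    return selected


# Hypothetical example: seven intersections, each described by one parameter,
# forming two clusters (0 to 3 and 10 to 12).
contexts = [0, 1, 2, 3, 10, 11, 12]
train_perf = [1.0] * len(contexts)
print(greedy_select(train_perf, contexts, slope=0.05, budget=2))
```

Maximizing the new total in each round is equivalent to maximizing the marginal gain, because the current total is fixed within a round. In this example the loop first picks index 3 (parameter 3, which best covers the larger cluster) and then index 5 (parameter 11, the center of the other cluster).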

Real-World Implications

When applied to simulated environments, MBTL's ability to identify the most valuable training tasks has produced strong results. In traffic management, for example, the algorithm can achieve high performance by training on a small subset of intersections, without requiring exhaustive data from every intersection in a city.

Such efficiency not only reduces training costs but also accelerates the deployment of AI systems in real-world applications. “We were able to see incredible performance improvements with a very simple algorithm by thinking outside the box,” Wu says. “An algorithm that is not very complicated stands a better chance of being adopted by the community because it is easier to implement and easier for others to understand.”

Looking Ahead

The researchers’ success with MBTL opens the door to tackling even more complex problems. Future iterations of the algorithm aim to address high-dimensional task spaces and other intricate challenges. Moreover, the team is eager to apply their approach to practical, real-world scenarios, particularly in next-generation mobility systems.

The broader implications of this research extend to fields beyond traffic management. By making reinforcement learning more efficient and adaptable, MBTL could revolutionize how AI systems are trained for a wide range of applications, from healthcare to autonomous vehicles to smart infrastructure.

This groundbreaking work, which will be presented at the Conference on Neural Information Processing Systems, was supported by a National Science Foundation CAREER Award, the Kwanjeong Educational Foundation PhD Scholarship Program, and an Amazon Robotics PhD Fellowship.
