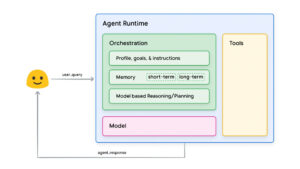

ByteDance, the company behind TikTok, just unveiled its latest leap into the AI video space: Seaweed-7B. Don’t let the name fool you: “Seaweed” is short for Seed-Video, and the “7B” refers to its roughly 7-billion-parameter diffusion transformer architecture.

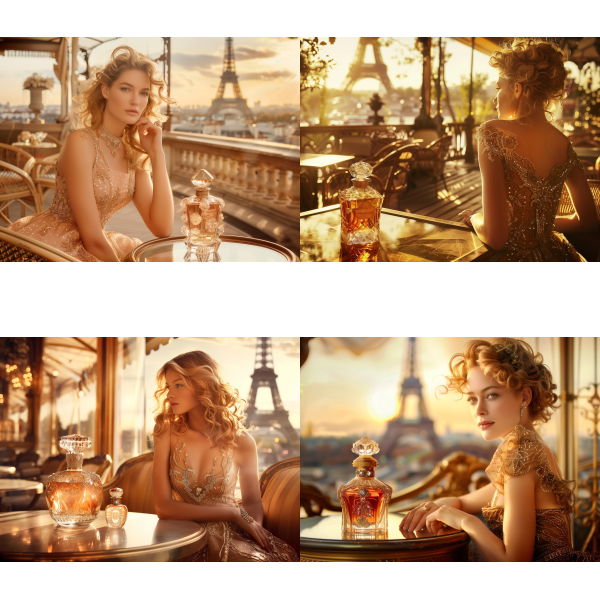

Like most modern AI video models, Seaweed-7B handles the usual suspects:

- Image-to-video generation

- Video from reference images

- Semi-realistic human characters

- Multiple shot generation

- High-resolution outputs

But here’s where Seaweed-7B separates itself from the pack…

5 Things Seaweed-7B Can Do That No One Else Can

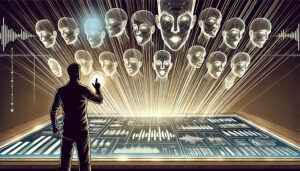

1. Generates Both Audio and Video — Together

Until now, most AI workflows have required adding audio as a separate step after the video is generated. And while there’s been experimentation around audio-to-video generation, it’s still early days.

Seaweed-7B flips the script. It generates both simultaneously — meaning music, ambiance, voice, and video arrive as a cohesive unit. If you’re building for immersive experiences, that’s a big deal.

2. Native Long-Form Video (25+ Seconds)

Yes, technically, AI-generated videos longer than 25 seconds have been around. But those often rely on stitching tricks or looped extensions.

Seaweed-7B’s demo showed a native 25-second clip generated in one shot, not extended and not patched. That’s significant. For context: OpenAI’s Sora has teased minute-long clips in demos, but public access still maxes out at around 20 seconds.

ByteDance is setting a new benchmark for default output length — high-quality, long-form video from a single prompt.

3. Real-Time Generation at 720p, 24fps

This one’s a genuine game-changer.

Seaweed-7B reportedly runs real-time generation at 1280x720 resolution, delivering 24 frames per second — live.

That opens the door to reactive storytelling, live content creation, and maybe even real-time generation of game cinematics. Imagine directing a film or music video… with no render time.
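To put those numbers in perspective, here’s a quick back-of-the-envelope sketch in Python. It uses only the figures quoted in this article (1280x720, 24 fps, a 25-second clip). The function names are made up for illustration, and nothing here reflects how Seaweed-7B actually works internally.

```python
# Back-of-the-envelope numbers for "real-time 720p at 24 fps".
# All inputs come from the article; the helper names are hypothetical.

WIDTH, HEIGHT, FPS = 1280, 720, 24
CLIP_SECONDS = 25


def frame_budget_ms(fps: int) -> float:
    """Longest time a single frame may take if output must keep up with playback."""
    return 1000 / fps


def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Raw pixel throughput needed to sustain the given resolution and frame rate."""
    return width * height * fps


def frames_in_clip(seconds: int, fps: int) -> int:
    """Total number of frames in a clip of the given length."""
    return seconds * fps


budget = frame_budget_ms(FPS)
throughput = pixels_per_second(WIDTH, HEIGHT, FPS)
total_frames = frames_in_clip(CLIP_SECONDS, FPS)

print(f"Time budget per frame: {budget:.1f} ms")
print(f"Pixels per second: {throughput:,}")
print(f"Frames in a {CLIP_SECONDS}-second clip: {total_frames}")
```

In other words, "real time" here means producing each 1280x720 frame in under about 42 milliseconds on average, and a single 25-second clip works out to 600 frames.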

4. CameraCtrl-II: Direct the Camera Inside a 3D World

This feature turns Seaweed-7B into something close to a game engine.

ByteDance describes it as:

“Generating high-quality dynamic videos with conditional camera poses… effectively transforming video generative models into view synthesizers.”

Translation: you can control the camera’s motion inside a 3D space, similar to how you’d direct a shot in Unreal Engine. The model builds the world, then lets you choose your camera moves (pans, zooms, tilts, and more) with real 3D consistency.

It uses a method called FLARE to extract 3D point clouds from frames, enabling full 3D reconstruction. Wild.
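To make “conditional camera poses” a little more concrete, here’s a minimal sketch of what a camera path could look like as data: one 4x4 pose matrix per frame, describing a simple pan. This is an illustration under my own assumptions, not CameraCtrl-II’s actual input format, which this article doesn’t describe. The helper names `yaw_pose` and `pan_trajectory` are hypothetical.

```python
import math

# Hypothetical illustration: a camera pan expressed as one 4x4 pose per frame.
# This is NOT ByteDance's API; it only shows the general idea of a pose sequence.


def yaw_pose(yaw_rad, position=(0.0, 0.0, 0.0)):
    """Return a 4x4 pose matrix: rotation about the vertical (Y) axis plus a position."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    x, y, z = position
    return [
        [c, 0.0, s, x],
        [0.0, 1.0, 0.0, y],
        [-s, 0.0, c, z],
        [0.0, 0.0, 0.0, 1.0],
    ]


def pan_trajectory(num_frames, total_yaw_deg):
    """Evenly spaced poses sweeping from 0 to total_yaw_deg degrees of pan."""
    if num_frames < 2:
        raise ValueError("need at least two frames to describe a camera move")
    total = math.radians(total_yaw_deg)
    return [yaw_pose(total * i / (num_frames - 1)) for i in range(num_frames)]


# One second of footage at 24 fps, panning 30 degrees in total.
poses = pan_trajectory(num_frames=24, total_yaw_deg=30.0)
print(f"{len(poses)} poses; last frame rotation row: {poses[-1][0][:3]}")
```

The point is that a “camera move” boils down to a list of positions and orientations over time. A camera-controllable model would take something like this alongside the prompt and render each frame from the matching viewpoint.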

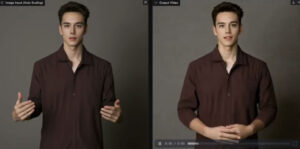

5. Improved Motion & Physics

Let’s be real: most AI models still fumble when it comes to fluid, believable movement — especially with complex actions like figure skating, spinning, or coordinated dance.

Seaweed-7B doesn’t solve all those problems, but it shows clear progress. Motions are smoother, physics are more grounded, and there’s better consistency across frames — especially in scenes with rapid movement or environmental interaction.

So… Can You Use It Yet?

Not quite. Seaweed-7B appears to still be closed-access, and ByteDance has been teasing it for months. But don’t get too caught up in availability.

Everything you see here — from native audio-video generation to in-model camera directing — is a preview of what’s coming to every major AI video platform.

Seaweed-7B isn’t just a model. It’s a signal.

The next generation of AI video tools is about:

- Longer formats

- Real-time rendering

- Built-in sound

- 3D world navigation

- And cinematic control like never before

We’re entering the age of AI filmmaking as a solo sport — and ByteDance just took a major leap forward.
