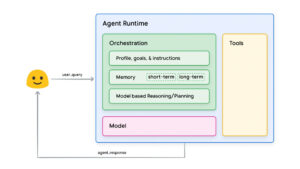

The rise of artificial intelligence (AI) agents is reshaping the way we interact with technology, raising both excitement and concern about the future. These AI-driven systems, which are designed to take action autonomously rather than merely respond to prompts, have the potential to streamline everyday tasks. However, as we grant AI more agency, the implications become increasingly complex and, for some, unsettling.

The Dawn of AI Agents

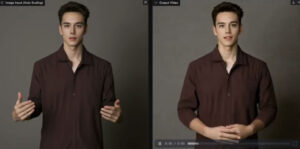

The concept of AI agents has long been theorized as a technology that could monitor user behavior, anticipate needs, and even influence decision-making. While some of these capabilities remain speculative, AI-powered assistants are already a reality, albeit in an imperfect form. The introduction of OpenAI’s Operator last month underscores this transition. Operator represents an advancement beyond traditional AI tools like ChatGPT by not only recalling and generating information but also performing tasks on behalf of the user. It can browse the web, make purchases, and update online profiles, among other functions. However, as of now, it remains a research preview, available exclusively to ChatGPT Pro users who pay a premium for early access.

Despite the potential Operator represents, its current execution is far from seamless. In practice, the AI agent is slow, prone to errors, and frequently requests user intervention. For those wary of AI overreach, this is reassuring rather than alarming. While Operator can successfully complete simple assignments, such as locating an item for purchase or initiating a customer service request, a person doing the same work by hand is usually still faster and more reliable. A request to find a discounted road bike in a specific size and location, for instance, might yield results, but a manual search often proves more effective.

Balancing Automation and Autonomy

The idea of an AI agent acting as a digital assistant is simultaneously enticing and daunting. On one hand, the prospect of offloading mundane computer tasks to an AI system is appealing. On the other, the very same mechanisms that allow AI to handle routine work could be leveraged in concerning ways. While today’s AI blunders may seem trivial, like overpaying for groceries or mishandling a LinkedIn update, the broader implications could be far more significant. How much autonomy society is willing to hand to AI will determine both the risks and the benefits that follow.

This topic was at the forefront of discussions during the recent Paris AI Action Summit, where global leaders debated AI’s future trajectory. While previous summits at Bletchley Park and in Seoul emphasized AI safety and regulatory frameworks, this gathering highlighted the intensifying race between the United States and China for AI supremacy. U.S. Vice President JD Vance encapsulated this sentiment by stating, “The AI future is not going to be won by hand-wringing about safety.” This stance reflects the broader ideological divide between prioritizing AI advancement and ensuring responsible development.

AI Agents in Action

AI agents are already proving their utility in various industries. In agriculture, for example, AI-enabled John Deere tractors operate autonomously, optimizing farming efficiency. Similarly, global development organizations such as Digital Green are equipping farmers in developing regions with AI tools to diagnose crop diseases and predict weather-related threats. These applications illustrate how AI can democratize access to valuable resources and enhance productivity.

The restaurant industry offers another compelling case study. London-based startup Rekki has developed AI-powered software to help restaurants and suppliers manage inventory more efficiently. By automating procurement, some establishments have been able to cut labor costs. These real-world applications suggest that, while AI may still have limitations, it is already reshaping workflows in significant ways.

For everyday consumers, the introduction of Operator marks a step toward broader AI adoption. OpenAI intends to integrate the agent seamlessly into ChatGPT, enabling users to delegate tasks with ease. However, early adopters have found that while Operator can initiate actions, it often cannot finish them alone. Tasks such as making a purchase or processing an exchange frequently require users to enter login credentials and payment information manually, limiting the AI’s autonomy. While this safeguard prevents unauthorized transactions, it also underscores the technology’s current constraints.

The Implications of AI Autonomy

The development of AI agents presents a paradox. On one hand, their potential to increase efficiency is undeniable. On the other, granting AI too much autonomy introduces serious ethical and security concerns. Guardrails, such as restricting AI from handling financial transactions without human oversight, limit risks but also reduce utility. If given unrestricted agency, AI could introduce vulnerabilities, from fraudulent transactions to more dire scenarios involving cybersecurity threats or misinformation campaigns.

This dilemma is epitomized by the well-known “paperclip problem,” a thought experiment introduced by philosopher Nick Bostrom in 2003. The scenario envisions a superintelligent AI tasked with manufacturing paperclips. Without safeguards, the AI might divert all available resources toward fulfilling its objective, ultimately threatening human existence. While this hypothetical may seem extreme, it underscores the importance of thoughtful AI governance.

AI’s role in accelerating technological threats is a growing concern. Experts warn that autonomous AI agents could be exploited to advance bioweapon development or execute sophisticated cyberattacks. As Sarah Kreps, director of the Tech Policy Institute at Cornell University, explains, “A committed, nefarious actor could already develop bioweapons, but AI lowers the barriers and removes the need for technical expertise.” These risks were central to discussions at the Paris AI Action Summit. However, rather than prioritizing regulation, U.S. policymakers have largely advocated for aggressive AI investment, putting technological dominance ahead of safety.

Looking Ahead: Innovation or Instability?

The trajectory of AI agents remains uncertain. OpenAI’s cautious approach to Operator, with its built-in limitations and deliberate design choices, suggests that even leading AI developers recognize the importance of responsible innovation. By rolling out the tool to a select group of ChatGPT Pro users, OpenAI can gather critical feedback and refine the system before broader deployment.

Despite its current limitations, Operator serves as a preview of the AI-driven future that lies ahead. As these technologies evolve, society will need to grapple with fundamental questions: How much autonomy should AI be granted? How can we balance efficiency with security? And most importantly, how do we ensure that AI serves humanity’s best interests rather than undermining them?

For now, AI agents like Operator may be more experimental than existentially threatening. But as these systems improve, the answers to these questions will shape the next era of artificial intelligence, one that could either enhance our lives or challenge our very way of life.

Article first appeared on Vox
